Scaling for VOLUME with Frontity & Cloudflare

Moving to a headless model for WordPress has its obvious advantages: freedom for designers to design as they please, and freedom for developers to build multiple modular, decoupled apps and sites without having to plan everything out from the beginning.

However, what really has me interested is the ability to scale without breaking the bank.

Because the essential elements of the site are static JS/CSS, caching is easy. Much of the WordPress functionality that previously required memory- and CPU-intensive PHP (like admin-ajax) can be handled by JS in the browser, so each user makes fewer round trips to Apache or PHP-FPM.

The only truly dynamic parts of the site (as far as the server goes) are the REST API endpoints, and even they can be cached as needed.
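To make that split concrete, here is a minimal TypeScript sketch of how an origin or edge layer might choose `Cache-Control` headers: long-lived caching for static JS/CSS bundles and a short, revalidating TTL for WordPress REST API responses. The `cacheControlFor` helper, the path patterns and the TTL values are hypothetical assumptions for illustration, not this site's actual configuration; it also assumes bundle filenames are content-hashed, so a changed file gets a new URL.

```typescript
// Hypothetical helper: picks a Cache-Control header for a request path.
// The TTLs below are illustrative assumptions, not measured or recommended values.
const ONE_YEAR_SECONDS = 60 * 60 * 24 * 365;
const REST_TTL_SECONDS = 60 * 5; // assumed 5-minute freshness for REST responses

function cacheControlFor(pathname: string): string {
  // Assumed content-hashed JS/CSS bundles never change under the same URL,
  // so they can be cached for a long time and marked immutable.
  if (/\.(js|css)$/.test(pathname)) {
    return `public, max-age=${ONE_YEAR_SECONDS}, immutable`;
  }
  // WordPress REST API routes live under /wp-json/. Cache them briefly and
  // allow a stale copy to be served while the cache revalidates.
  if (pathname.startsWith("/wp-json/")) {
    return `public, max-age=${REST_TTL_SECONDS}, stale-while-revalidate=60`;
  }
  // Everything else (e.g. server-rendered HTML) gets a conservative default.
  return "public, max-age=0, must-revalidate";
}

console.log(cacheControlFor("/static/main.3f2a.js"));
// → public, max-age=31536000, immutable
console.log(cacheControlFor("/wp-json/wp/v2/posts"));
// → public, max-age=300, stale-while-revalidate=60
```

The `stale-while-revalidate` directive (RFC 5861) is what lets a cache keep answering from its copy while it refreshes the REST response in the background, which is why the dynamic part of the site can still be cached.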

I wanted to put headless scalability to the test with Frontity, the awesome open-source React theme framework for WordPress that this website, 403page.com, is built on.

The hardware stack

There's nothing fancy here. I have two entry-level virtual instances. The first runs nginx with PHP-FPM to process PHP, and has its own cache configured to flush either every 24 hours or whenever a new post/page is published (among some other neat mods which I won't go into here). This is where my WordPress code lives, running as the backend under a subdomain of 403page.com.
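The flush rule described above ("every 24 hours or when a new post/page is published") can be expressed as a small staleness check. This is a hypothetical TypeScript sketch of that policy only, not the author's nginx configuration; `isStale`, `CacheEntry` and the use of epoch-millisecond timestamps are assumptions for illustration.

```typescript
// Hypothetical sketch of the origin cache's invalidation rule:
// an entry is stale once it is 24 hours old, or as soon as any post/page
// has been published after the entry was cached. Times are epoch milliseconds.
const MAX_AGE_MS = 24 * 60 * 60 * 1000;

interface CacheEntry {
  cachedAt: number; // when the page was written to the cache
}

function isStale(entry: CacheEntry, lastPublishedAt: number, now: number): boolean {
  const expired = now - entry.cachedAt >= MAX_AGE_MS;
  const contentChanged = lastPublishedAt > entry.cachedAt;
  return expired || contentChanged;
}

const HOUR_MS = 60 * 60 * 1000;
const sampleEntry: CacheEntry = { cachedAt: 0 };
console.log(isStale(sampleEntry, -HOUR_MS, 2 * HOUR_MS)); // false: fresh, nothing new published
console.log(isStale(sampleEntry, HOUR_MS, 2 * HOUR_MS)); // true: a post was published after caching
console.log(isStale(sampleEntry, -HOUR_MS, 25 * HOUR_MS)); // true: older than 24 hours
```

Combining a time-based expiry with publish-triggered invalidation means readers see new posts promptly, while the origin only re-renders pages at most once a day otherwise.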

The second runs Node.js and contains the Frontity theme code, which pulls content from the first instance over the REST API. This is what generates the JS & CSS that make up the frontend you're looking at right now.
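For readers new to the decoupled setup, here is a minimal TypeScript sketch of the kind of request a frontend makes to the backend. The `/wp-json/wp/v2/posts` route and the `per_page` and `slug` query parameters are part of the core WordPress REST API; the `postsUrl` and `fetchLatestPosts` helpers are hypothetical and are not Frontity's internal API (Frontity handles this for you through its own source package).

```typescript
// Hypothetical helpers for querying the WordPress backend on its own subdomain.
// Only the /wp-json/wp/v2/posts route and its query parameters come from WordPress core.
const API_BASE = "https://medusa.403page.com/wp-json/wp/v2/";

function postsUrl(params: Record<string, string | number>): string {
  // Resolve "posts" against the base (the trailing slash on API_BASE matters).
  const url = new URL("posts", API_BASE);
  for (const [key, value] of Object.entries(params)) {
    url.searchParams.set(key, String(value));
  }
  return url.toString();
}

// Requires a runtime with a global fetch (Node.js 18+, browsers, Cloudflare Workers).
async function fetchLatestPosts(count: number): Promise<unknown[]> {
  const res = await fetch(postsUrl({ per_page: count }));
  if (!res.ok) {
    throw new Error(`REST API request failed: ${res.status}`);
  }
  return (await res.json()) as unknown[];
}

console.log(postsUrl({ per_page: 5 }));
// → https://medusa.403page.com/wp-json/wp/v2/posts?per_page=5
```

Because every request is a plain GET to a predictable URL, a CDN can cache these responses just like static files.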

Both of these could easily live on a single VM instance, but I liked separating them because I wanted to test a truly decoupled setup.

Caching at scale

Enter Cloudflare, with 180+ server locations around the globe to serve a headless site for traffic at scale. While I do have a paid plan with them (mostly for Workers, which are AWESOME), I decided to test how scaling would work on their free tier.

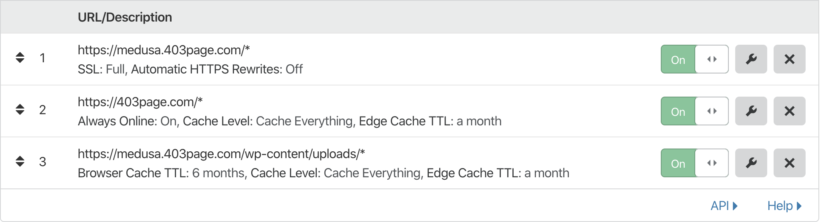

I put both the main domain (403page.com) and the REST API subdomain (medusa.403page.com) behind the Cloudflare CDN, and set up a couple of page rules for good measure like so:

You'll notice I don't have a page rule for the REST API endpoint. This was an oversight at first, but it didn't seem to make a difference to performance in my case, so ¯\_(ツ)_/¯.

Putting it to the test

I've used Loader.io for testing so far, though I plan to run a full-fledged load test with BlazeMeter soon. It's worth noting that this kind of testing is ideal for a blog or news site. I would expect an ecommerce site to behave differently, though I'd still expect it to far outperform traditional WordPress.

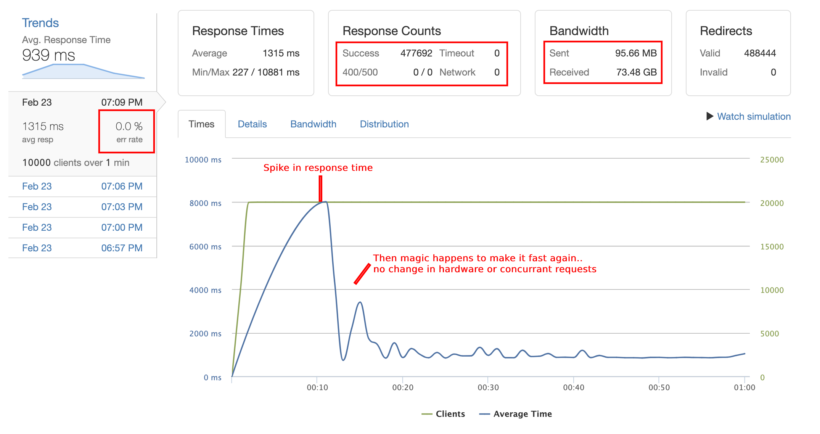

I configured the test for 10,000 hits per second to the homepage for 60 seconds.

For the first 10 seconds, the average response time was 8 seconds, which is pretty bad. It seems to be a phenomenon inherent in Cloudflare's load-balancing algorithm (or something more obvious that I'm too dense to have figured out yet).

After those first 10 seconds, though, something magical happened and things really kicked into gear: the response time dropped to around the 1-second mark.

Within one minute, the site loaded successfully 477,692 times, serving a whopping 73GB of data with a ZERO error rate. The average response time, including the dire initial 10 seconds, was 1.315 seconds.
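The throughput and payload size implied by these figures can be worked out directly. This TypeScript snippet is just that arithmetic; it assumes decimal units (1 GB = 10^9 bytes), so if the dashboard actually reported GiB the per-load size would be about 7% larger.

```typescript
// Back-of-the-envelope figures from the one-minute test.
// Assumes decimal units: 1 GB = 10^9 bytes.
const successfulLoads = 477_692;
const testDurationSeconds = 60;
const bytesServed = 73 * 1e9;

const loadsPerSecond = successfulLoads / testDurationSeconds;
const bytesPerLoad = bytesServed / successfulLoads;

console.log(loadsPerSecond.toFixed(0)); // → 7962
console.log((bytesPerLoad / 1000).toFixed(0)); // → 153 (kB per page load)
```

So the CDN sustained close to 8,000 successful page loads per second, each averaging roughly 153 kB.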

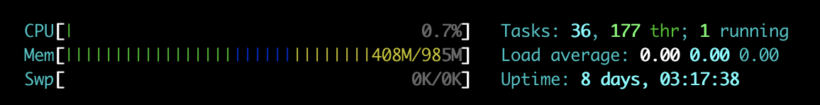

While this was happening, here's what htop looked like on the Node.js instance:

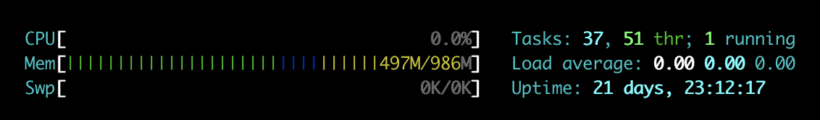

And here's what htop looked like on the nginx instance:

Cloudflare is effectively hosting the entire site, only refreshing its cached copy of the REST API shortly after a new post is published.

The heaviest load the origin server will ever see is when authors and editors are adding content, and when the REST API endpoint cache expires and needs to revalidate.

With that kind of load, you could run the origin server on pretty modest hardware, even a Raspberry Pi! (Not that I recommend it; you just could.)

In effect, for a site like this one, the origin hardware hardly matters.

In context...

In 2019, en.wikipedia.org was estimated to have had 1,229,282,645 visitors per month, which works out to roughly 28,456 per minute (assuming a 30-day month). In 60 seconds, Frontity on Cloudflare successfully served 477,692 visitors.

In other words, the test above handled roughly 16.8 times Wikipedia's average per-minute traffic!
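The Wikipedia comparison rests on a simple conversion from monthly to per-minute traffic. This TypeScript snippet reproduces it, assuming a 30-day month (43,200 minutes), which is the assumption that yields the 28,456 figure.

```typescript
// Reproduces the Wikipedia comparison, assuming a 30-day month.
const wikipediaVisitsPerMonth = 1_229_282_645;
const minutesPerMonth = 30 * 24 * 60; // 43,200

const wikipediaPerMinute = wikipediaVisitsPerMonth / minutesPerMonth;
const testLoadsPerMinute = 477_692;

console.log(Math.round(wikipediaPerMinute)); // → 28456
console.log((testLoadsPerMinute / wikipediaPerMinute).toFixed(1)); // → 16.8
```

Note this compares against Wikipedia's average rate; its peak-minute traffic would be higher, so the ratio is a rough illustration rather than a like-for-like benchmark.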

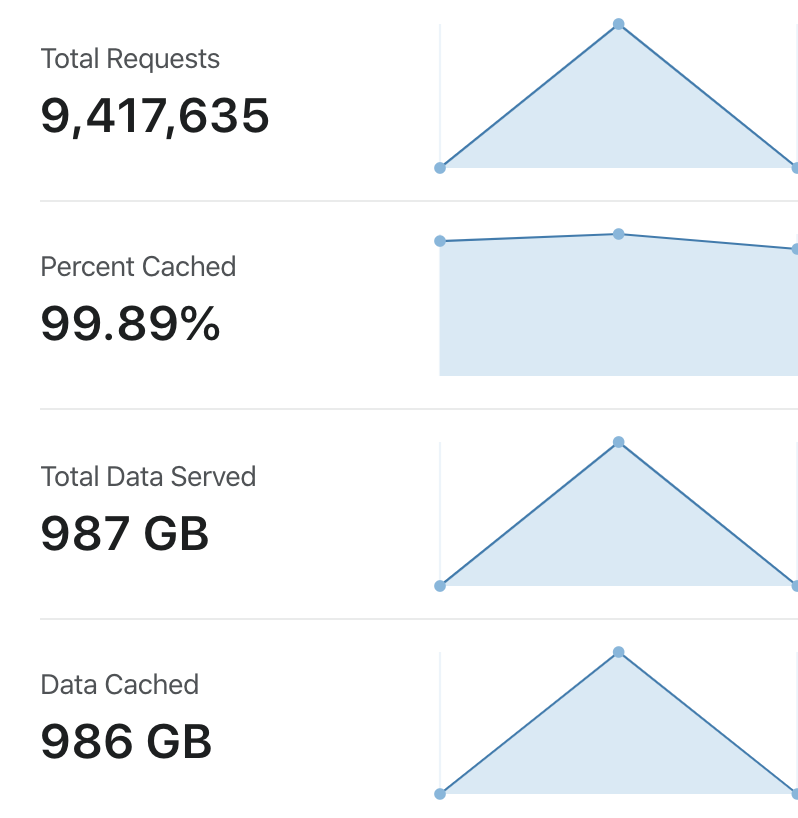

I actually ran multiple tests in the same evening. Here's a breakdown of how much data was served without breaking a sweat:

In total, 978GB was served over less than a single evening of testing, and only a relatively minuscule 1GB of it came from the origin server (99.89% cached).
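The cached percentage is simply one minus the origin's share of total bytes served. This TypeScript helper (`cachedShare` is a hypothetical name) shows the calculation; with the rounded 1GB origin figure it gives about 99.90%, so the reported 99.89% presumably reflects an origin total slightly above 1GB before rounding.

```typescript
// Share of traffic served from the CDN cache rather than the origin server.
function cachedShare(totalGB: number, originGB: number): number {
  return 1 - originGB / totalGB;
}

console.log((cachedShare(978, 1) * 100).toFixed(2)); // → 99.90
```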

A blog or news-type site is just about the best possible scenario for a test like this.

I have no doubt that I could scale for even bigger traffic on the same hardware, and for a far more prolonged period (10,000 clients per second for 60 seconds is the most you can do on Loader.io's free plan, and I'm a cheapskate).

I'm very excited to do further testing with far more traffic, for far longer, and with more specific on-site actions. But if this test has taught me anything, it's that I'll be using Frontity on large-scale, high-traffic projects.